According to a Reuters report, Microsoft Corporation intends to offer its cloud computing customers a platform of AMD (Advanced Micro Devices) AI chips that will compete with components made by Nvidia, an AI chip manufacturer with a valuation of a trillion US dollars. The U.S. tech giant will provide details of the initiative at its Build developer conference next week.

In addition to the AMD offering, the company will unveil a preview of its new Cobalt 100 custom processors at the conference. The processors offer 40% better performance than other processors based on Arm Holdings’ technology, the report added.

Companies including Adobe and Snowflake, a cloud-native platform, have begun to use the chips, indicating their potential to significantly impact the cloud computing and AI technology sectors.

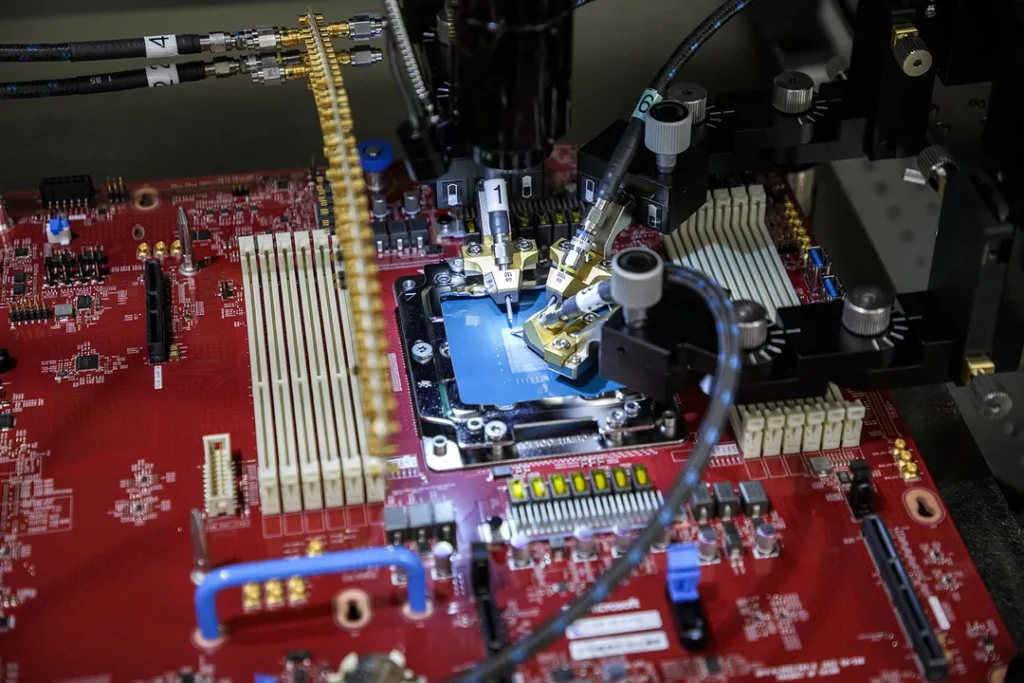

Microsoft’s clusters of AMD’s flagship MI300X AI chips will be integrated into its Azure cloud computing service. They will give the company’s cloud customers an alternative to Nvidia’s H100 family of powerful graphics processing units (GPUs), which dominates the data center chip market for AI.

The introduction of AMD’s MI300X chips and the Cobalt 100 processors reflects Microsoft’s strategy to diversify its AI hardware offerings and strengthen its competitive edge in the cloud computing market.

AMD anticipates USD 4 billion in AI chip revenue this year. The company said the chips are powerful enough to train and run large AI models.

In addition to Nvidia’s top-tier AI chips, Microsoft’s cloud computing unit offers access to its proprietary AI chip, Maia. The Maia 100 AI accelerator, named after a bright blue star, is designed to run cloud AI workloads such as large language model training and inference.

Microsoft is currently testing its Cobalt chips, which were announced in November last year, to power Teams, its messaging tool for businesses. The chips are intended to compete with the in-house Graviton CPUs made by Amazon.

Microsoft will also cut its pricing for accessing and running large language models at Build next week, though exactly what that will look like remains unclear.

Companies like Google, Amazon, Meta, and Microsoft, which build AI models or run applications, link together multiple GPUs because the data and computation do not fit on a single processor.

Microsoft’s move to integrate AMD’s MI300X chips into Azure is significant because it addresses the difficulty of obtaining Nvidia’s high-demand H100 GPUs.

(With inputs from Reuters)